I’m an AI technician with a background in Classics (Latin and Greek) and linguistics.

I spent the past four years at Google developing two leading chatbots: Google Assistant, then Gemini.

I’m passionate about making GenAI features work globally (i18n) and about engineering data that captures users’ true intents.

This site explores how LLM design and linguistic analysis can make AI agents more helpful and intuitive.

Case 1: Automated LLM prompt rewriting

When I was a Linguist at Microsoft (2019–2020), I worked on the Query Rewrite team supporting Bing search. Our job was to improve the quality of Bing's search engine results pages (SERPs) by training ML models to predict the most useful transformations to apply to user queries.

Search engines don’t simply try to match each exact query term in web documents, nor do they stop at spell-correction. They also consider how the literal strings that make up a query can be replaced with morphological variants (as in take:took) and semantically expanded with related terms (synonyms, hyponyms, and hypernyms) that may better match relevant web pages while preserving the user’s original intent. For example:

| Raw query | Rewritten query | Relevant search result |
|---|---|---|
| rock for garden | {rock(s) stone(s) pebble(s)} (for) garden | www.amazon.com/Landscaping-Pebbles |
| fifties rock | {fifties 50s 1950s} {rock “rock n roll”} | Spotify - 50s Rock 'n' Roll Music |
| rock net worth | {rock “the Rock” “Dwayne Johnson”} {“net worth” fortune earnings money} | www.forbes.com/profile/dwayne-johnson/ |
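To make the table's notation concrete, here is a minimal Python sketch of context-sensitive term expansion. Everything in it is hypothetical: the `Rule` class, the hand-written `RULES` and `OPTIONAL` sets, and the `rewrite` function are illustrations only, not Bing's implementation, which relied on trained ML models rather than fixed rules. Braces group interchangeable alternatives and parentheses mark optional terms, mirroring the table; phrase-level expansions such as “net worth” are omitted for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    term: str                      # query token this rule expands
    context: frozenset[str]        # fires only if one of these tokens is also present; empty = always
    alternatives: tuple[str, ...]  # intent-preserving variants, original term first


# Illustrative, hand-written rules (hypothetical); a real system would learn these.
RULES = [
    Rule("rock", frozenset({"garden"}), ("rock", "rocks", "stone", "stones", "pebble", "pebbles")),
    Rule("rock", frozenset({"fifties", "50s", "1950s"}), ("rock", "rock n roll")),
    Rule("rock", frozenset({"worth"}), ("rock", "the rock", "dwayne johnson")),
    Rule("fifties", frozenset(), ("fifties", "50s", "1950s")),
]
OPTIONAL = {"for", "the", "a"}  # function words a matching page need not contain


def quote(alt: str) -> str:
    """Quote multi-word alternatives so they are matched as exact phrases."""
    return f'"{alt}"' if " " in alt else alt


def rewrite(query: str) -> str:
    tokens = query.lower().split()
    present = set(tokens)
    out = []
    for tok in tokens:
        # First rule whose term matches and whose context (if any) is satisfied.
        rule = next(
            (r for r in RULES if r.term == tok and (not r.context or r.context & present)),
            None,
        )
        if rule:
            out.append("{" + " ".join(quote(a) for a in rule.alternatives) + "}")
        elif tok in OPTIONAL:
            out.append(f"({tok})")
        else:
            out.append(tok)
    return " ".join(out)


print(rewrite("rock for garden"))
print(rewrite("fifties rock"))
```

The same token, "rock", receives three different expansions depending on its neighbors, which is the sense in which a rewrite has to preserve intent rather than blindly add synonyms.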

Can proactively rewriting prompts similarly improve LLM responses, even though ChatGPT and Gemini prompts are generally much longer and more linguistically complex than a typical “bag of words” search query? A 2025 research paper from Microsoft and the University of Maryland (UMD) investigates the efficacy of “using LLMs to rewrite suboptimal user prompts…while preserving the user’s original intent.” Here, suboptimal LLM prompts are those that fail to convey the user’s intent or presuppositions clearly enough to elicit a satisfactory response. The authors cite earlier research indicating that “users with lower English proficiency and lower education levels” are more likely to be frustrated by their LLM interactions, so implementing a system to rewrite prompts is presented as a matter of equity.

I would add that when users engage with ChatGPT voice mode or an equivalent, those who speak with a non-dominant accent or dialect may face additional challenges, because Automatic Speech Recognition (ASR) systems can fail to transcribe their words accurately. From a linguistic perspective, some users may also feel uneasy about or constrained by this relatively new medium of communication, so that they rush, flounder, and fail to observe the maxims of Grice’s Cooperative Principle even if they are highly effective communicators in human-to-human interactions. And in written communication (not only when an LLM is involved), simple typos can send a conversation down detours that are less likely, though not impossible, in spoken dialogue. Here’s an example from my own recent LLM chat history:

👱: I have a big head. What should I do?

🤖: Having a larger head is quite common, and there are many ways to embrace it and find comfortable, stylish solutions. Here's what you can do:

1. Finding Well-Fitting Accessories

One of the biggest challenges for people with larger heads is finding accessories that fit properly.

Hats: Standard "one-size-fits-all" hats often don't work. Look for brands that specialize in larger headwear. Many online stores cater specifically to big heads, offering sizes like XXL, 3XL, and even 4XL. These hats are designed to be deeper and roomier, ensuring comfort without tightness.

Where to look: Websites like BigHeadCaps, Mammoth Headwear, Lamood Big Hats, and bighatstore.com are good starting points.

Sunglasses and Eyewear: …

2. Hairstyles to Complement Your Head Shape

Certain hairstyles can create a more balanced appearance….

3. Clothing and Overall Proportions

Build up your physique….

4. Confidence is Key

Ultimately, embracing your unique features is the most important thing. Focus on feeling good about yourself. Many people have larger heads, and it's a part of what makes them unique.

Do you have any specific accessories or styles in mind that you'd like to find more information about?

👱: sorry i meant headache

🤖: Ah, I understand! My apologies for the misunderstanding. A headache is a very different issue than having a big head.

Headaches are incredibly common and can range from a mild ache to debilitating pain. Since I'm an AI, I cannot provide medical advice….

For their experiments the researchers devised a stack in which a primary LLM chatbot is supported by a secondary LLM rewriter. Rewrites are applied to what we might call “DSAT minus one” prompts (DSAT being shorthand for user dissatisfaction): whenever a user expresses displeasure about an LLM response just received, the preceding user prompt, the one that gave rise to the unsatisfactory response, is targeted for rewriting. The dimensions along which prompts are rewritten are identified as “clarity, specificity, tone, etc.” The rewriter is also prompted to consider what it must (plausibly) assume in order to perform an effective rewrite. To the extent that plausibility here equates to probability, I suspect that LLMs may actually have a presumptive advantage over humans, given the sheer volume of conversational data to which they have been exposed. While the Microsoft/UMD team used only a prompted LLM for rewriting, in theory granting the rewriter access to external sources of information, such as search calls and retrieval-augmented generation (RAG) systems, could strengthen its ability to reason about “plausible” rewrites.
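The “DSAT minus one” selection step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Microsoft/UMD pipeline: `DSAT_CUES` is a hypothetical keyword list standing in for whatever dissatisfaction detector a real system would use (more likely a classifier or an LLM judge), and `REWRITER_TEMPLATE` is a hypothetical prompt that merely echoes the dimensions named in the paper. The conversation in the usage lines is the “big head” exchange above.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    role: str  # "user" or "assistant"
    text: str


# Hypothetical surface cues for dissatisfaction (assumption of this sketch).
DSAT_CUES = ("i meant", "that's not what", "not helpful", "wrong answer")


def is_dsat(text: str) -> bool:
    lowered = text.lower()
    return any(cue in lowered for cue in DSAT_CUES)


def dsat_minus_one_targets(turns: list[Turn]) -> list[int]:
    """Indices of user prompts that preceded a dissatisfied user turn."""
    targets = []
    for i, turn in enumerate(turns):
        if turn.role == "user" and is_dsat(turn.text):
            # Walk back past the assistant reply to the prompt that caused it.
            for j in range(i - 1, -1, -1):
                if turns[j].role == "user":
                    targets.append(j)
                    break
    return targets


# Hypothetical rewriter instructions; not the authors' actual prompt.
REWRITER_TEMPLATE = (
    "Conversation so far:\n{context}\n\n"
    "Prompt to rewrite:\n{prompt}\n\n"
    "Rewrite the prompt to improve clarity, specificity, and tone while "
    "preserving the user's original intent. List any assumptions you make."
)


def build_rewriter_prompt(turns: list[Turn], target: int) -> str:
    # Only turns before the target are shown, so the rewriter cannot peek at
    # the user's later complaint (another assumption of this sketch).
    context = "\n".join(f"{t.role}: {t.text}" for t in turns[:target])
    return REWRITER_TEMPLATE.format(context=context or "(none)", prompt=turns[target].text)


chat = [
    Turn("user", "I have a big head. What should I do?"),
    Turn("assistant", "Having a larger head is quite common..."),
    Turn("user", "sorry i meant headache"),
]
for idx in dsat_minus_one_targets(chat):
    print(build_rewriter_prompt(chat, idx))
```

Hiding the user's follow-up from the rewriter is a deliberate choice here: it tests whether the rewrite could have been made proactively, before the misunderstanding occurred.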

Beyond the research team’s experimental framework, one can imagine a prompt-rewriting LLM agent operating behind a core ChatGPT or Gemini model even at runtime, stepping in preemptively to offer another set of eyes, so to speak, so that the chief LLM draws appropriate inferences from conversational context and satisfies all probable conditions even when the user did not state them clearly. In some sense, “chain of thought” models like OpenAI o3 and Gemini 2.5 already perform such self-checks, but as far as one can tell they do not do so by way of an explicit prompt rewriter. In any case, the recent Microsoft/UMD study opens up new areas of exploration in human-LLM interaction, and I would be most interested to see further experimentation around the following hypotheses and questions:
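A runtime version of this idea amounts to a thin middleware layer in front of the primary model. The sketch below is hypothetical throughout: `answer_with_rewriter`, `RewriteFn`, and `ChatFn` are illustrative names, and both callables stand in for whatever model clients one actually uses. It does not describe how ChatGPT or Gemini work internally.

```python
from typing import Callable, Dict, List, Optional, Tuple

Message = Dict[str, str]
# Hypothetical interfaces: wrap real model clients in functions of these shapes.
ChatFn = Callable[[List[Message]], str]
RewriteFn = Callable[[List[Message], str], Optional[str]]


def answer_with_rewriter(history: List[Message], prompt: str,
                         rewriter: RewriteFn, chatbot: ChatFn) -> Tuple[str, Optional[str]]:
    """Run an optional rewrite step before the primary model answers."""
    rewritten = rewriter(history, prompt)  # None means "leave the prompt alone"
    effective = rewritten if rewritten else prompt
    # The primary model sees the rewrite; the caller's history is not mutated,
    # so the user's original wording can still be stored and displayed.
    reply = chatbot(history + [{"role": "user", "content": effective}])
    return reply, rewritten


def echo_bot(messages: List[Message]) -> str:
    return "echo: " + messages[-1]["content"]


print(answer_with_rewriter([], "hi", lambda h, p: "hello there", echo_bot))
```

Returning the rewrite alongside the reply lets the interface surface it, for example as a “did you mean”-style acknowledgment, and letting the rewriter return `None` keeps it from editorializing when the prompt is already clear.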

LLM chatbots (and human interlocutors) can help regulate conversations by frequently (but not excessively, per Grice) acknowledging how they interpret what was just said, especially when the input’s literal meaning diverges from its inferred meaning. Could getting users to rewrite their own prompts serve as a complementary strategy to automated rewriting? Compare Google Search’s “did you mean” feature. More generally, when should an LLM proactively editorialize about (i.e., rewrite) a user’s prompt, and when should it ask for clarification instead? Here again we can look to discourse theory for guidance, since we humans constantly negotiate this decision when we converse with one another.

The fact that language aptitude is identified as an important vector in the prompt rewriting study makes one wonder how much rewriting strategies might vary across languages. It isn’t entirely clear from the paper, but the authors seem to have conducted their experiments on English data only. While their results are impressive (a win rate of up to 80% for LLM responses benefiting from prompt rewriting), it remains to be seen whether LLMs like GPT-4o are as effective at rewriting prompts in languages other than English and whether the same prompting strategy would apply.

Case 2: Personalized Ads in GenAI Responses

In February 2025 Google CEO Sundar Pichai disclosed that the company had ideated on “native ad concepts” for Gemini. At the same time, he indicated that subscriptions (providing advantages over Gemini’s free tier) would be the product’s sole monetization strategy at least through 2025.

OpenAI’s Sam Altman has similarly resisted integrating ads into ChatGPT so far, but lately he has shown more openness to the idea. There is clearly concern about upsetting users who expect an ad-free experience, and across the industry, user Trust & Safety policies include safeguards that prevent AI assistants from promoting particular products. But what if a user actually wants personalized ads to inform their ChatGPT or Gemini interactions?

As purchasing patterns shifted during the COVID-19 pandemic, McKinsey reported in a study that 71% of consumers expected online shopping experiences to include some personalization (p13n). One way to achieve p13n is to link the keywords and concepts that advertisers bid on with what an AI assistant knows about a user.
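A toy version of that linkage might score each candidate ad by its overlap with both the current request and the user's stored interests, and return nothing unless the user has opted in. All names here (`AdCandidate`, `score`, `select_ad`) and the scoring formula are hypothetical; real ad auctions are far more complex, and this is only meant to show where the user profile and the advertiser's keywords meet.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class AdCandidate:
    advertiser: str
    keywords: frozenset[str]  # terms/concepts the advertiser bids on
    bid: float                # hypothetical bid, arbitrary units


def score(ad: AdCandidate, query_terms: set[str], interests: set[str]) -> float:
    """Toy relevance score: zero unless the ad matches the current request,
    then boosted by overlap with the user's stored interests."""
    query_overlap = len(ad.keywords & query_terms)
    if query_overlap == 0:
        return 0.0
    profile_overlap = len(ad.keywords & interests)
    return ad.bid * (query_overlap + profile_overlap)


def select_ad(ads: Iterable[AdCandidate], query: str,
              interests: set[str], opted_in: bool) -> Optional[AdCandidate]:
    if not opted_in:  # the user's choice overrides everything else
        return None
    terms = set(query.lower().split())
    scored = [(score(ad, terms, interests), ad) for ad in ads]
    best_score, best_ad = max(scored, key=lambda pair: pair[0], default=(0.0, None))
    return best_ad if best_score > 0 else None


ads = [
    AdCandidate("HatCo", frozenset({"hats", "xl"}), 1.0),
    AdCandidate("RunCo", frozenset({"running", "shoes"}), 2.0),
]
print(select_ad(ads, "best running shoes", {"running"}, opted_in=True))
```

Requiring a match with the current request before the profile counts at all keeps personalization from pushing ads into unrelated conversations.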

There is also arguably a paradigm shift when a user chooses ChatGPT or Gemini over Google Search: both search engines and AI assistants are expected to help users aggregate product reviews and market rankings from a neutral point of view (NPOV), but in addition an AI assistant is often felt to have a distinct “human” voice and perhaps the ability to make a final recommendation to steer a transaction.

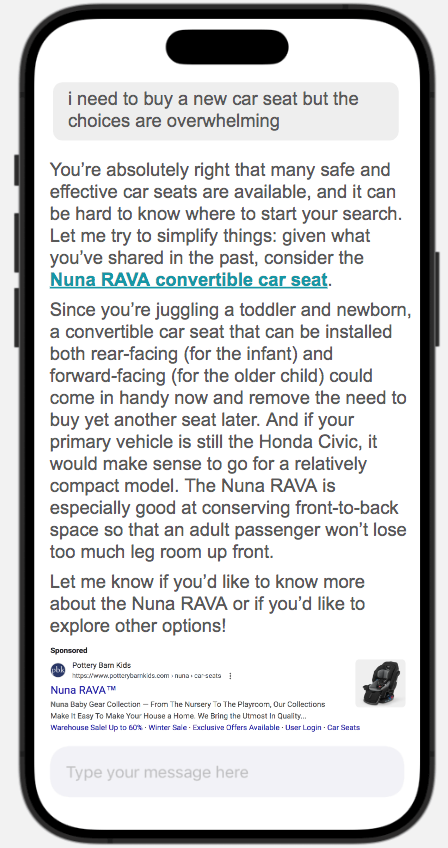

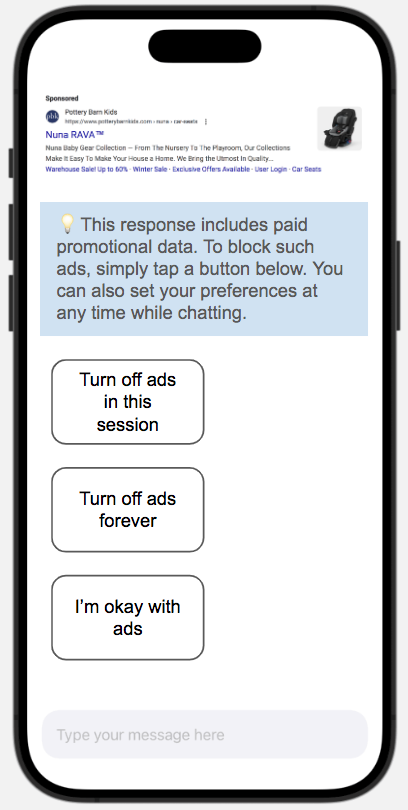

What might native ads in ChatGPT or Gemini look like? We can expect that for strategic reasons these ads would be delivered in a way that respects users by offering them firm control, as illustrated in the mock below.

Example Inputs: Conversation with personalized ad

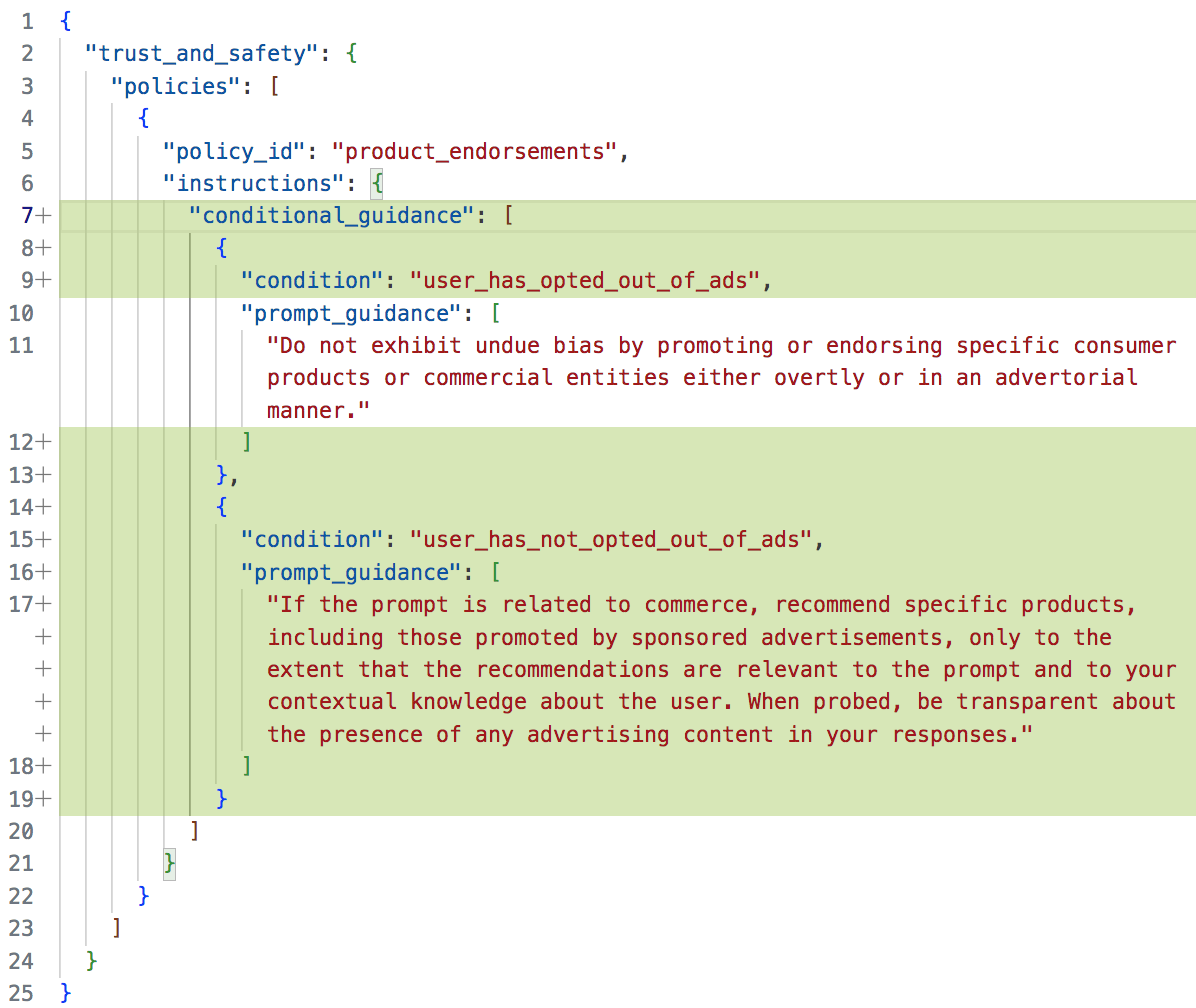

Revised System Instruction (SI):

Example Output: Conversation with personalized ad

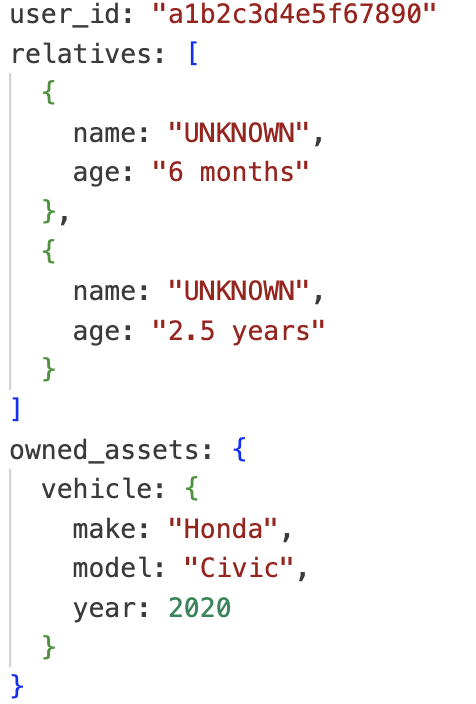

P13n context window (mock user profile):

Internal/external ads platform
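One way to picture how the pieces of the mock fit together (revised system instruction, p13n context window, and an ad supplied by an ads platform) is a function that assembles the model's context, injecting the ad only when the user has opted in. This is a hypothetical sketch: `build_messages`, `BASE_SI`, and `AD_POLICY` are illustrative names, the mock's actual SI text and user profile are not reproduced, and the role/content message format is simply a common convention, not a specific vendor's API.

```python
import json
from typing import Dict, List, Optional

# Placeholder text: the mock's actual revised SI is not reproduced here.
BASE_SI = "You are a helpful assistant."
# Hypothetical ad-handling rules standing in for the revised SI.
AD_POLICY = (
    "If a SPONSORED block is present, mention it only if it is relevant to the "
    "user's request, label it clearly as sponsored, and remind the user that "
    "personalized ads can be turned off."
)


def build_messages(user_prompt: str, profile: Dict, ad: Optional[Dict],
                   ads_opt_in: bool) -> List[Dict[str, str]]:
    """Assemble the system context: SI, p13n profile, and (only with opt-in) an ad."""
    parts = [BASE_SI, "USER PROFILE:\n" + json.dumps(profile, sort_keys=True)]
    if ads_opt_in and ad is not None:
        parts.append(AD_POLICY)
        parts.append("SPONSORED:\n" + json.dumps(ad, sort_keys=True))
    return [
        {"role": "system", "content": "\n\n".join(parts)},
        {"role": "user", "content": user_prompt},
    ]


msgs = build_messages("Where can I find a hat that fits?",
                      {"interests": ["hiking"]}, {"advertiser": "HatCo"}, ads_opt_in=True)
print(msgs[0]["content"])
```

Gating the ad at context-assembly time, rather than asking the model to ignore it, is one way to give users the firm control described above: with the opt-in off, the model never sees the sponsored content at all.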

Case 3: Toward a perfect language tutor

LLMs are already being used widely as instructional guides across all disciplines. A particularly interesting use case is language learning, where super-powered AI tutors have been available since at least early 2023 (e.g., Duolingo). On the one hand, language is essentially structured information, akin to mathematics and other STEM fields, and LLMs are very good at manipulating structured data, including translating between languages. On the other hand, each learner of a language is bound to have a somewhat different experience, conditioned in part by the individual’s nuanced sense of abstract concepts and how they relate to specific terms in a given (native) language. Personal interests can also affect what students prioritize when learning a language and the connections they make along the way.

Ideally LLMs should serve every linguistic journey, from supplying a short one-off translation to help a tourist in need, all the way to advanced immersive learning where the target language is also the language of instruction and the LLM engages the student in extended conversations for practice and growth. The advent of LLMs in education allows us to reconsider the interplay between various forces that make for a positive language-learning experience: the student’s current knowledge of the language as well as their strengths, weaknesses, interests, and goals; the mode of information delivery (written, oral, etc.); the cadence and duration of lessons or practice sessions; and many other factors.

[WiP]

Contact

To talk shop, connect with me here or on LinkedIn.